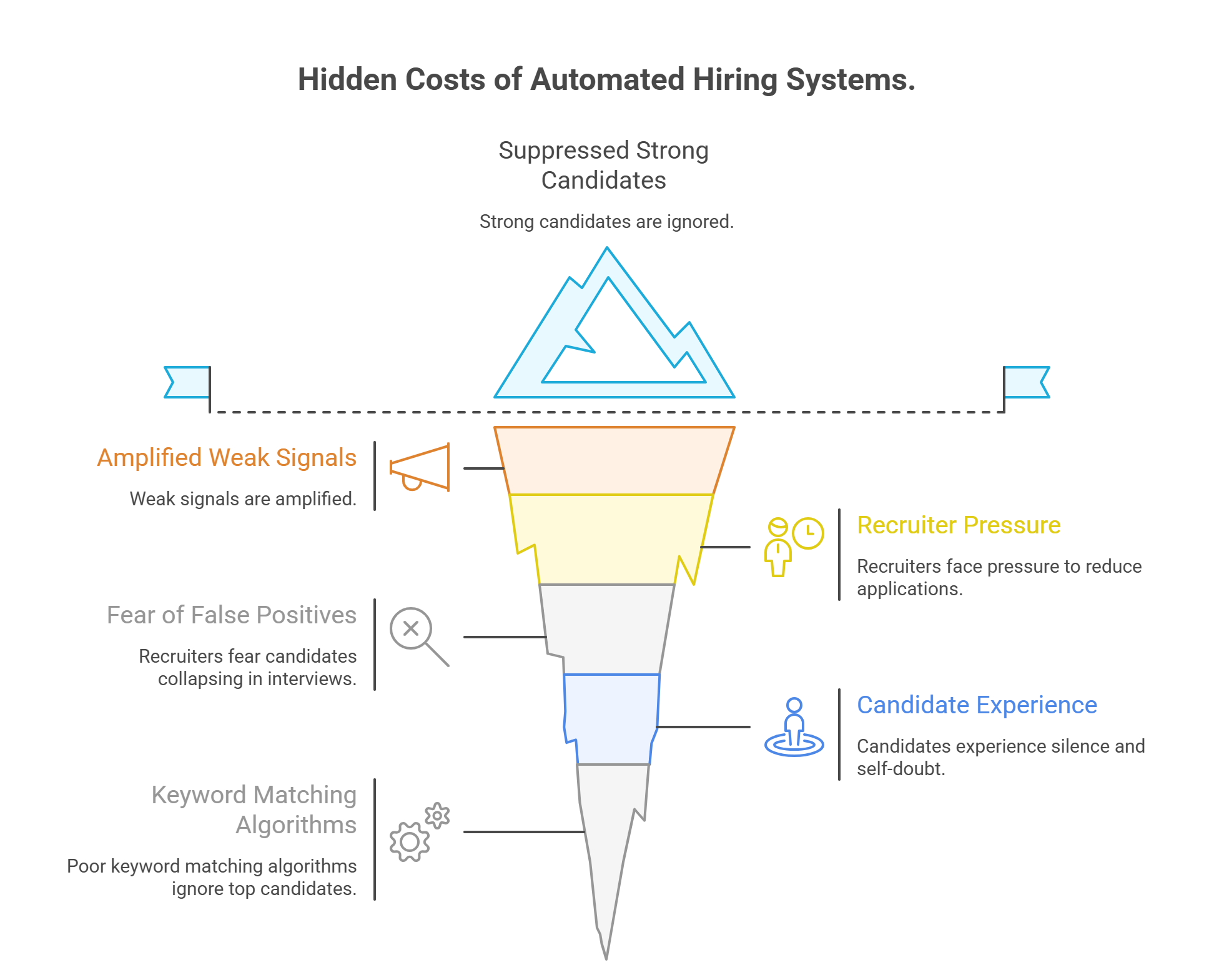

What actually happens inside modern hiring systems

Why strong candidates disappear and weak signals get louder

Most enterprise hiring teams today rely on applicant tracking systems and AI matching tools as the first line of filtering. In many cases, multiple systems are layered together.

Recruiters often told us, “I do not fully trust the score, but I need it to manage volume.”

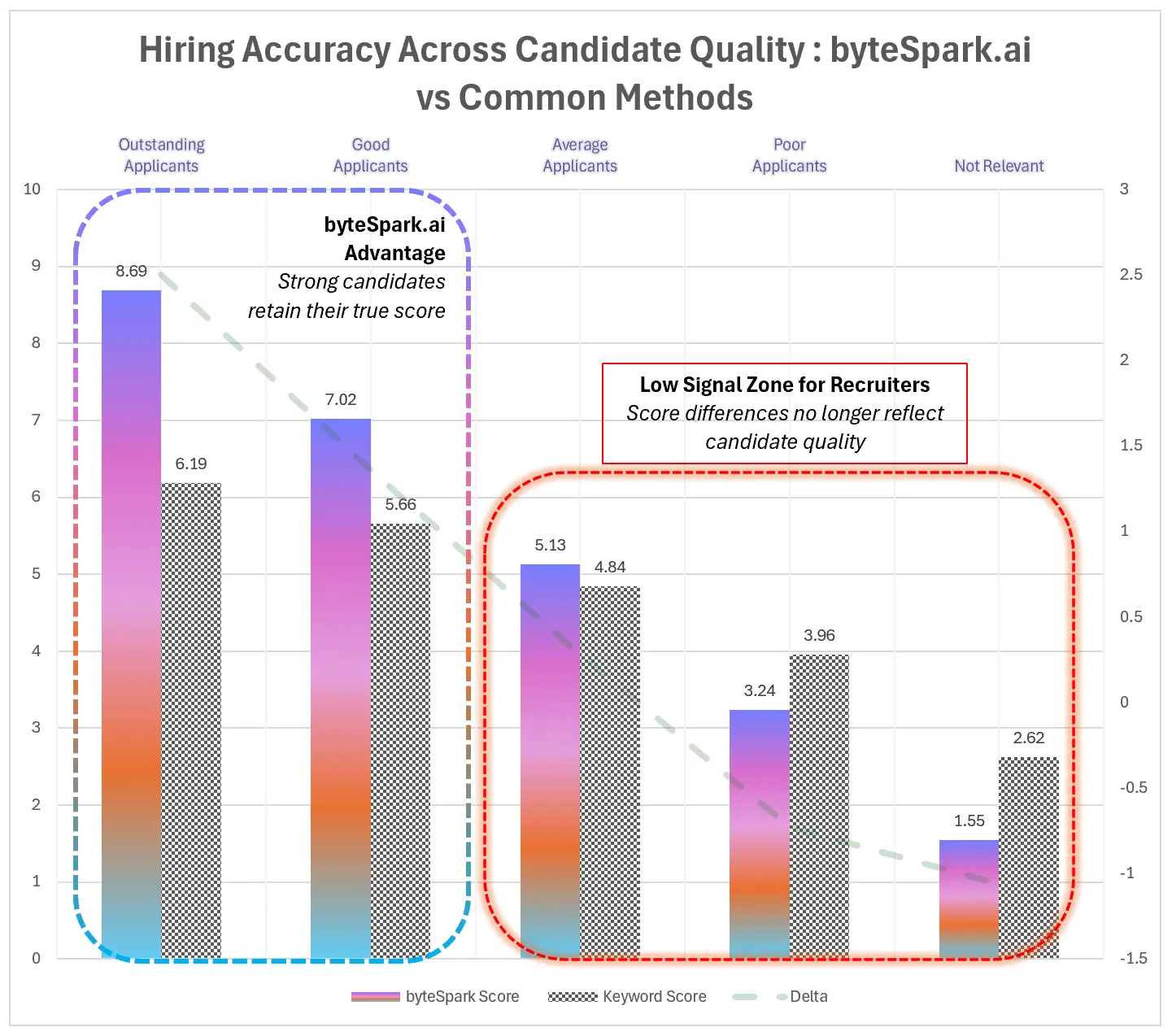

Across our work, we benchmarked outcomes where teams were already using well-known enterprise and agency-grade recruitment platforms. These systems were trusted, paid for, and embedded into daily workflows.

The platforms benchmarked were Phenom, Eightfold, Lever, SmartRecruiters, SAP SuccessFactors, Oracle Fusion, Avature, Zoho Recruit, Recruitee and one other platform whose name we no longer have on record.

For each role, the job was created on both the enterprise's existing platform and the byteSpark.ai platform. When a candidate applied, the data they provided, including their CV, was passed to both platforms in parallel. We then compared the two sets of results to produce a gap analysis.

We did not replace them.

We ran alongside them.

Each role attracted more than 400 applicants on average, giving us a healthy population for statistical analysis. One striking finding, shown in the chart, is an inversion as candidate quality declines from left to right. While the scores of strong candidates were suppressed, the same did not happen for weak candidates. Instead, they scored higher than they deserved.

It is a common complaint: "People who do not have the merit get the top jobs because they have the gift of the gab." Now we know why.

The fear recruiters rarely say out loud

Recruiters are under constant pressure to cut thousands of applications down to a shortlist quickly,

avoid missing obvious matches, and keep hiring moving without slowing the business.

What they fear most is a false positive.

A candidate who looks strong on paper, passes automated screening,

reaches interview, and then collapses.

False positives waste time, credibility, and momentum.

As a result, many systems are tuned conservatively to avoid them.

That decision has a hidden cost.

What candidates experience instead

When systems are tuned to avoid false positives,

they quietly create false negatives.

These are strong candidates with real depth,

real delivery, and real experience

that does not compress neatly into keywords.

They do not fail loudly.

They disappear silently.

From the candidate side, it feels like this:

- You apply and meet the requirements

- You never hear back

- You start questioning your own ability

This is why applying for jobs feels like a black hole.

Candidates often tell us, “It looks like they read my CV to build that job post, but I still never heard back.”

What the data shows when you group candidates by quality

When we grouped candidates by overall strength and compared how different approaches scored them,

three patterns became impossible to ignore.

- Strong candidates get suppressed. Scores compress at the top, where separation matters most.

- Average candidates all start to look the same.

- Noise from poor candidates gets amplified.

In one example, a candidate scored 4 out of 10 with our AI scoring. But with the right amount of keyword stuffing, the same candidate scored 8.7 out of 10 on keyword matching. A recruiter relying on that score would have wasted their time on an interview.

In another example, a candidate received an AI score of 9.2 but a keyword score of only 2.1. A delta that large means this candidate would have been ignored. This is where most of us fall.
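To make the stuffing mechanism concrete, here is a minimal sketch of a naive keyword-overlap scorer, assuming a scorer that simply counts how often job keywords appear in a CV. The function names, the keyword list, the saturation point and the 3.0 delta threshold are all hypothetical; this is not how any of the benchmarked platforms, or byteSpark.ai, actually scores candidates.

```python
import re

# Hypothetical job keywords, for illustration only.
KEYWORDS = ["python", "kubernetes", "aws", "terraform"]


def keyword_score(cv_text: str, job_keywords: list[str]) -> float:
    """Hypothetical naive scorer: counts keyword hits and scales them to 0-10."""
    words = re.findall(r"[a-z0-9+#]+", cv_text.lower())
    # Every repetition counts as a hit, which is exactly what stuffing exploits.
    hits = sum(words.count(k.lower()) for k in job_keywords)
    max_hits = 3 * len(job_keywords)  # hypothetical saturation point
    return round(10 * min(hits, max_hits) / max_hits, 1)


def flag_large_delta(ai_score: float, kw_score: float, threshold: float = 3.0) -> bool:
    """Flag candidates whose two scores disagree by more than a hypothetical threshold."""
    return abs(ai_score - kw_score) >= threshold


# A CV describing real delivery in plain language...
deep_cv = ("Led the migration of a payments platform to AWS, designed the "
           "Terraform modules, and mentored five engineers in Python.")
# ...versus a CV that just repeats the keywords.
stuffed_cv = ("Python Python Kubernetes Kubernetes AWS AWS Terraform Terraform "
              "Python Kubernetes AWS Terraform")

print(keyword_score(deep_cv, KEYWORDS))     # 2.5
print(keyword_score(stuffed_cv, KEYWORDS))  # 10.0

# The two score pairs from the examples above both trip the delta flag.
print(flag_large_delta(4.0, 8.7), flag_large_delta(9.2, 2.1))  # True True
```

Because each repeated keyword adds to the count, the stuffed CV saturates the scale while the CV with real depth scores low, which mirrors the inversion described above.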

Our research shows that 39.8% of top candidates were ignored because of poor keyword-matching algorithms.
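One way a figure like this could be computed is sketched below, assuming "top candidates" means those with a reference score of at least 8 out of 10 and "ignored" means a keyword score below a shortlist cutoff of 5. Both thresholds, the function name and the sample score pairs are hypothetical and are not the data behind the 39.8% figure.

```python
def ignored_top_share(pairs, top_threshold=8.0, cutoff=5.0):
    """Share of top candidates whose keyword score falls below the shortlist cutoff.

    pairs: iterable of (reference_score, keyword_score) tuples (hypothetical format).
    """
    top = [(ref, kw) for ref, kw in pairs if ref >= top_threshold]
    if not top:
        return 0.0
    ignored = sum(1 for _, kw in top if kw < cutoff)
    return ignored / len(top)


# Hypothetical sample: (reference score, keyword score) per candidate.
sample = [(9.2, 2.1), (8.5, 7.0), (8.1, 4.0), (6.0, 8.7), (4.0, 8.7)]
print(ignored_top_share(sample))  # 2 of the 3 top candidates fall below the cutoff
```

Note that the weak candidates with inflated keyword scores do not affect this metric at all; it only measures how many strong candidates the keyword filter would drop.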

The uncomfortable truth

Recruiters are not ignoring good candidates.

Candidates are not imagining the problem.

And existing systems are not broken.

They are doing exactly what they were designed to do.

The issue is that scoring for volume control is not the same as scoring for hiring accuracy.

A hiring score is only useful if it preserves signal at the top.

This gap is why byteSpark.ai exists.

Not to replace existing systems,

but to restore signal where it matters most.